
- November 12, 2025


It often begins with a warm message, a compliment, or a flawless photo on a dating site. Everything seems right; the pictures look genuine, and the conversation flows. But what if that face didn’t really exist? What if it were generated by software instead of a person?

Today’s catfishing scams use AI to create fake identities that look real in every way. With AI-generated faces and convincing backstories, scammers trick people into trusting someone who doesn’t actually exist. That trust is often what leads to emotional harm and financial loss.

The fallout is more than just lost money. Victims commonly question their own judgment, feel shocked, and begin to doubt the character of people they meet online. The situation is made worse by deepfake and AI scam technology, which produces fake images and videos so easily that the line between real and fake grows steadily harder to see.

In this guide, we will walk you through how AI catfishing works, the signs of a fake profile, and the steps you can take to protect yourself, as well as how to try to get your money back if you have already become a victim.

To see how this digital deception got so advanced, let’s look back at where it began.

What Is Catfishing?

Before artificial intelligence entered the picture, catfishing simply meant creating a fake identity online to exploit someone emotionally or financially. In the early 2010s, this generally involved using stolen pictures, inventing a backstory, and exchanging long messages on social media platforms or dating apps.

Now, in the 2020s, everything is different. Modern catfishing is more than just lying; it is about building a believable human.

Scammers use AI-generated faces, voice synthesis, and chatbots whose replies are hard to tell apart from a real-time conversation with a person. Instead of building a handful of fake accounts by hand, they can now create hundreds of them, each with a unique face, its own interests, and digital habits that appear genuine.

| Before AI (2010s) | After AI (2020s) |
| --- | --- |
| Stolen photos from real users | AI-generated, never-before-seen faces |
| Manual messaging & emotional manipulation | Automated chatbots simulating empathy |
| Simple stories or excuses | Complex backstories supported by digital traces |
| Easy to verify through video calls | Deepfakes & audio clones hide the truth |

The line between a fake profile and a real person is blurring, and in the age of AI deception, trust itself has become a target. Now that you understand the shift from old-school catfishing to AI-powered scams, let's see how AI creates fake identities and how scammers use them.

How AI Creates Fake Faces and Identities

Have you ever encountered a profile photo that looks so flawless it seems it can't be real? Well, it might not be. A large number of fake accounts these days rely on AI to create convincing fake faces instead of using actual photos of people.

Here's how it works: AI learns from real human faces. It examines large collections of face photos and then produces entirely new images that belong to no one. These faces are typically generated with GANs (Generative Adversarial Networks) or diffusion models. The results can look astonishingly realistic, with appropriate lighting, natural smiles, and even flaws that seem human.

Experts say that about one-fourth of fake profiles now include AI-generated photos. For those photos, a reverse image search may turn up nothing: the image has never been used anywhere else on the internet, because the person in it does not exist.

Now that we know how these profiles are made, let's look at why they work so well and why people fall prey to them.

Why AI Catfishing Works

AI makes scams easier in three ways:

- Scale: Thousands of fake profiles can be made in minutes.

- Realism: Faces and stories feel believable.

- Emotion: Messages sound caring or romantic but come from chatbots, not people.

Modern catfishing isn’t just about fake photos anymore; it’s about fake people, built by AI to earn your trust and take your money. Knowing why these scams work is only half the story. To truly protect yourself, you need to see how they unfold, step by step.

How Modern Catfish Scams Work Step by Step

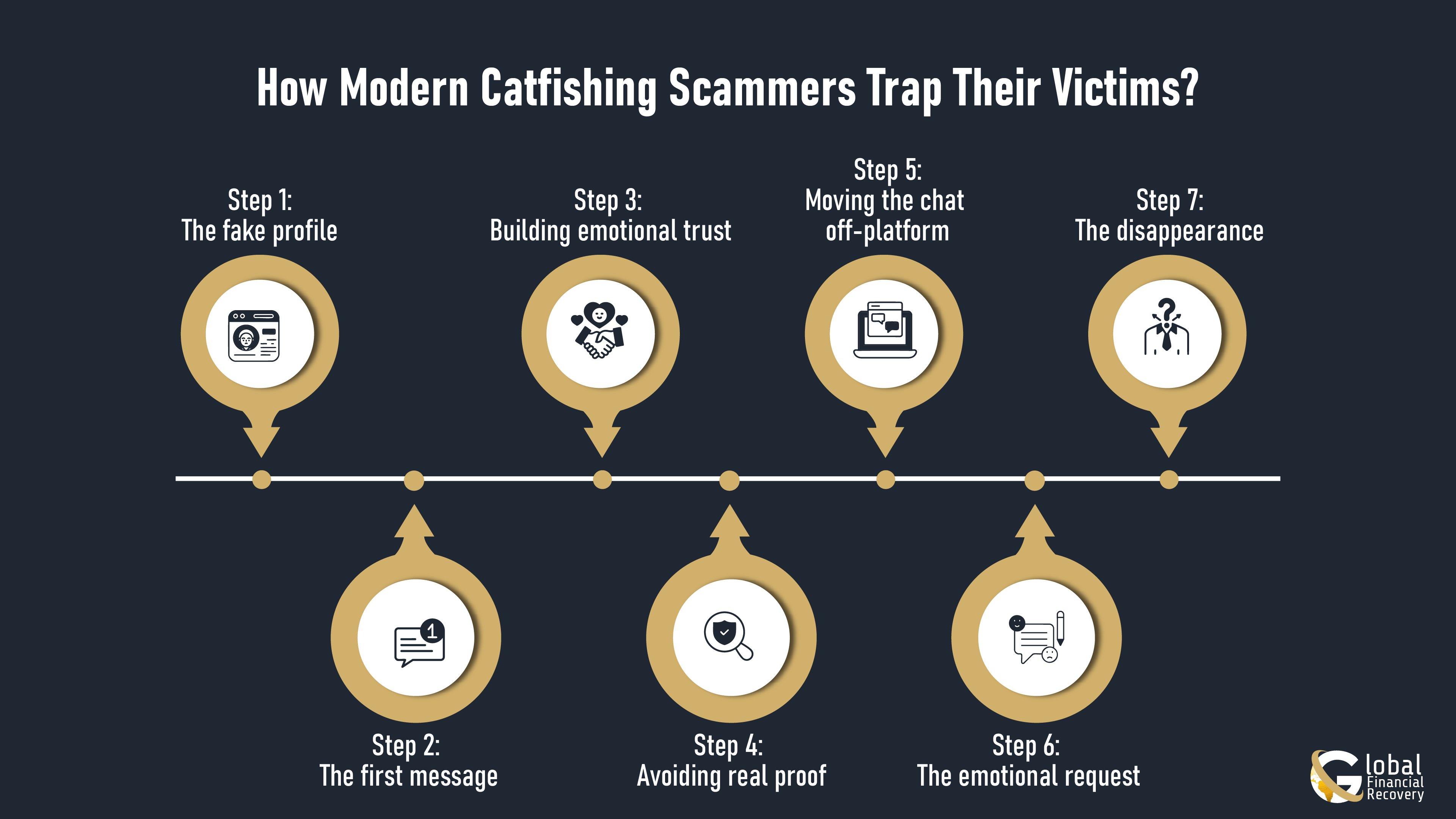

Most AI-catfishing scams follow a pattern. It may look harmless at first, but every move is designed to build trust and then break it.

These scams work because they feel legitimate and personalized. Here's how they usually play out:

Step 1: The Fake Profile

The scam starts with an appealing photo and a carefully written bio. These images are often AI-generated faces, not stolen pictures. They look natural, with perfect lighting, warm smiles, and nothing to raise suspicion.

Step 2: The First Message

You might get a simple “Hi, I saw your profile” or “You seem nice.” It feels genuine because it’s friendly and consistent with your interests.

Step 3: Building Emotional Trust

The scammer chats with you daily, remembers details, and offers comfort or compliments. Soon, they become part of your routine. That’s how emotional fraud begins. They use empathy and consistency to lower your guard.

Step 4: Avoiding Real Proof

When you suggest a video call or ask for another photo, they always have a reason: “My camera’s broken,” “I’m in a poor signal area.” It sounds believable, but it’s a way to avoid exposing the fake identity.

Step 5: Moving the Chat Off-Platform

They quickly push to private messaging apps like WhatsApp or Telegram.

Private channels give them more control, fewer platform checks, and fewer traces.

Step 6: The Emotional Request

Once trust is built, they come up with a fake story, like a family illness, business loss, or urgent financial need. Then comes the ask, usually for crypto transfers, wire payments, or gift cards.

Step 7: The Disappearance

After the money is sent, contact stops. Sometimes they reappear with a new excuse, or the account simply vanishes.

“Good morning, beautiful.”

“Can we FaceTime later?”

“Sorry, my camera’s still not working, but I miss you.”

By the time victims realize what has happened, both their hearts and wallets are hurt.

Common targets: older adults, people seeking companionship, or anyone going through emotional stress. Awareness is power. Here’s how you can identify fake profiles early and protect yourself.

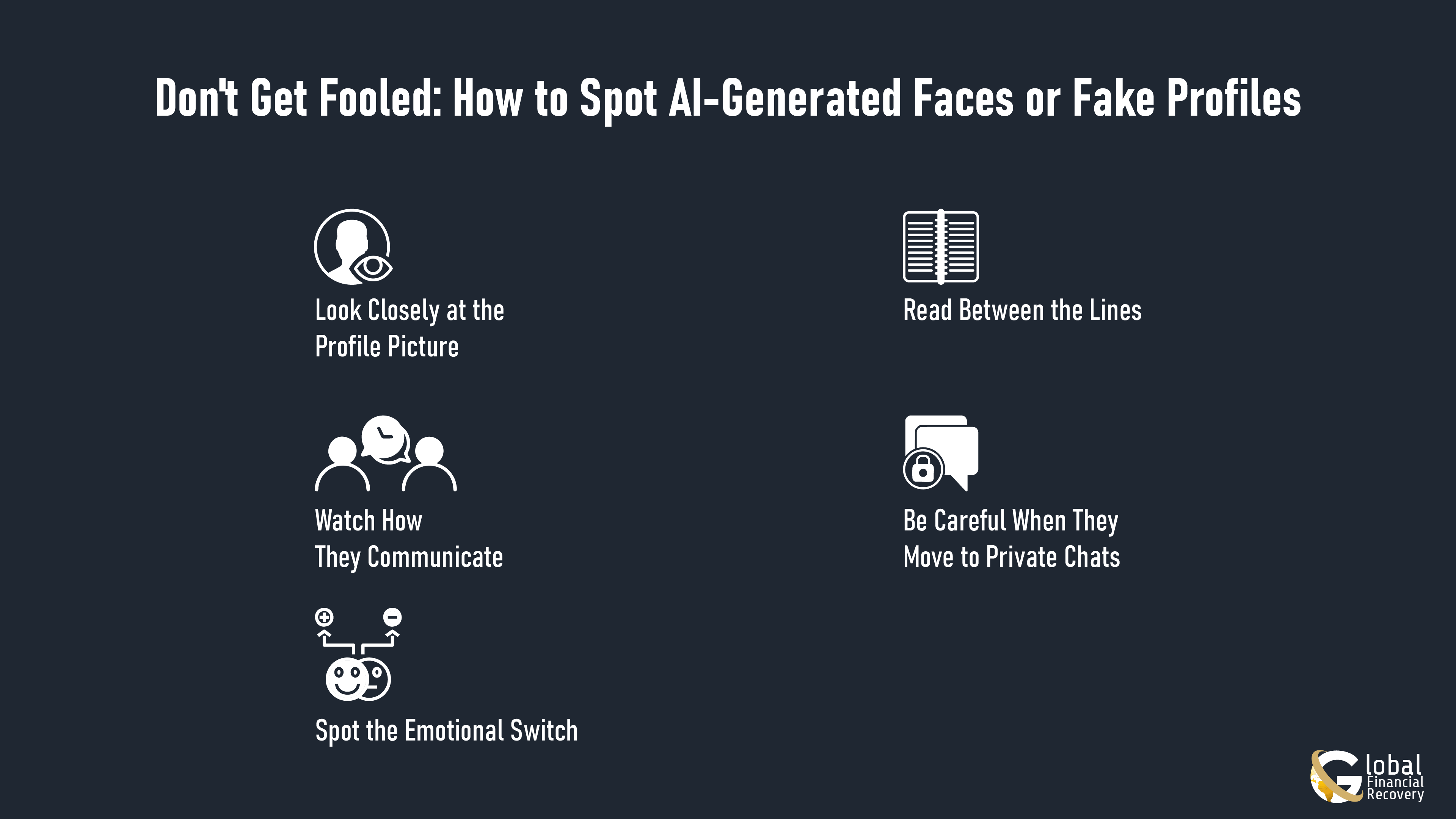

How to Spot an AI-Generated Face or Fake Profile

Fake profiles have become harder to detect. With AI, scammers can create faces that look real, write convincing bios, and chat like genuine people. But no matter how advanced the scam, small clues often give them away, and spotting them early can protect both your heart and your wallet.

Spotting imposter scams early can save you serious money and stress. Watch for these red flags:

1. Look Closely at the Profile Picture

AI photos often contain tiny mistakes: mismatched earrings, blurry backgrounds, uneven lighting, or strange-looking eyes. These are signs the image isn’t real.

Noticing such details early helps you pause before getting attached. A quick check with Google Lens or TinEye can reveal if the same photo appears elsewhere online.

2. Read Between the Lines

Fake profiles look polished but hollow. You’ll often see one perfect photo, a short bio, and very few followers or posts. If everything feels too neat or generic, trust your gut; real people have messy, lived-in profiles. Recognizing this helps you avoid forming trust with someone who doesn’t exist.

3. Watch How They Communicate

Scammers often follow a pattern: they reply fast, offer too many compliments, and push for emotional closeness very quickly. They’ll avoid video calls with excuses like “camera’s broken” or “bad internet.”

When you notice these patterns, step back. Real people don’t hide their faces or voices when they genuinely care.

4. Be Careful When They Move to Private Chats

If they try to shift from the app to WhatsApp, Telegram, or email, it’s not about privacy; it’s to avoid getting caught. Staying on the platform keeps you safer and helps you report the account if things seem off.

5. Spot the Emotional Switch

After earning your trust, scammers often share a sudden emergency or “investment opportunity” and ask for help, money, crypto, or gift cards.

Recognizing this shift early helps you stop before the loss happens. Real relationships don’t come with payment requests.

Your Quick Catfish Detection Checklist

- Run their photo through Google Lens or TinEye.

- Zoom in: eyes, earrings, and background details often expose AI edits.

- Check their profile age and posts; real accounts have a history.

- Stay on-platform until identity is confirmed.

- Ask for a short video or live selfie.

- Use tools like Sensity.ai or AI Face Detector for image checks.

- If they ever mention money, stop right there.

Following these steps helps you regain control, not just over your digital safety but over your emotional safety as well. Every fake profile you spot early is one less opportunity for a scammer to succeed.
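For readers who like to see a checklist written out explicitly, here is a minimal Python sketch of the same idea. It is illustrative only: the field names, the `assess` function, and the two-flag threshold are assumptions made for this example and do not come from any real detection tool. The one rule taken directly from the checklist is that any mention of money means stop.

```python
from dataclasses import dataclass


@dataclass
class ProfileObservations:
    """Answers to the checklist questions. All field names are hypothetical."""
    photo_found_under_other_name: bool  # reverse image search shows another owner
    image_artifacts_seen: bool          # odd eyes, earrings, or background
    account_has_history: bool           # older posts and varied photos exist
    pushed_off_platform: bool           # asked to move to a private messaging app
    refused_live_video: bool            # declined a short video or live selfie
    mentioned_money: bool               # any request for money, crypto, or gift cards


def assess(obs: ProfileObservations) -> tuple[str, list[str]]:
    """Return a caution level and the list of red flags that were raised."""
    flags = []
    if obs.photo_found_under_other_name:
        flags.append("photo appears elsewhere under a different name")
    if obs.image_artifacts_seen:
        flags.append("visual artifacts in the photo")
    if not obs.account_has_history:
        flags.append("account has little or no history")
    if obs.pushed_off_platform:
        flags.append("pressure to leave the platform")
    if obs.refused_live_video:
        flags.append("no live video or selfie")
    if obs.mentioned_money:
        flags.append("money was mentioned")
        return "stop", flags
    # Assumed threshold: two or more flags is treated as high caution.
    if len(flags) >= 2:
        return "high caution", flags
    if flags:
        return "caution", flags
    return "no flags yet", flags


# Example: polished photo with artifacts, new account, no video, wants WhatsApp.
example = ProfileObservations(
    photo_found_under_other_name=False,
    image_artifacts_seen=True,
    account_has_history=False,
    pushed_off_platform=True,
    refused_live_video=True,
    mentioned_money=False,
)
level, reasons = assess(example)
print(level)
for reason in reasons:
    print("-", reason)
```

A result of "no flags yet" is not proof that a profile is genuine; it only means none of the listed warning signs has shown up so far.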

Maybe you recognized some red flags early in this situation; that is certainly helpful. But if you have been caught in a scam, do not panic, because recovery is possible and starts with taking immediate action.

What to Do If You’ve Been Targeted by Scammers

If you've found out that someone online was not who they said they were, take a deep breath. You're not the only person this has happened to, and you are not at fault. Modern catfish scams combine advanced AI-powered tools with emotional manipulation, and they can prey on almost anyone.

The most important thing now is to act quickly and stay safe.

Stop all communication right now. Block the person on every platform where you were in contact. Do not respond to or confront them; further engagement is exactly what they are hoping for.

Collect evidence and every other detail. Screenshot chats and emails, and save any payment information if you made payments. You may need these types of records to report the case or try to recoup any lost funds.

Report the fraudulent profile. Use the in-app reporting tool (where available) on each dating app or social media platform. You should also report the case to your local police or cybercrime unit. The more reports law enforcement or a platform receives, the more likely they are to take down similar fraudulent accounts.

Immediately secure all your accounts. Change the passwords to your email, social media, and any crypto wallets, if applicable. Use two-factor authentication to limit access from outsiders.

Reach out to scam recovery experts. If money or crypto was sent, professional fund recovery services can help trace digital transactions and support financial fraud recovery efforts.

Taking these quick steps reduces further damage and helps build a record for investigation.
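If you are comfortable running a small script, one way to keep that record tidy is to log a fingerprint of each saved screenshot or file, so you can later show the files have not changed. The Python sketch below is only an illustration: the folder name `scam_evidence` and the function names `describe` and `build_manifest` are assumptions for this example, and a manifest like this is a personal record, not a substitute for a formal report.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def describe(name: str, data: bytes) -> dict:
    """Return the file name, size, SHA-256 fingerprint, and logging time."""
    return {
        "file": name,
        "bytes": len(data),
        "sha256": hashlib.sha256(data).hexdigest(),
        "logged_at_utc": datetime.now(timezone.utc).isoformat(timespec="seconds"),
    }


def build_manifest(evidence_dir: str) -> list[dict]:
    """Describe every regular file directly inside the given folder."""
    entries = []
    for path in sorted(Path(evidence_dir).iterdir()):
        if path.is_file():
            entries.append(describe(path.name, path.read_bytes()))
    return entries


if __name__ == "__main__":
    # "scam_evidence" is a placeholder; point this at your own folder.
    folder = Path("scam_evidence")
    if folder.is_dir():
        print(json.dumps(build_manifest(str(folder)), indent=2))
    else:
        print("Folder not found; nothing to list.")
```

Keep the original files untouched and store the printed manifest alongside them, so that anyone reviewing the case can recompute the fingerprints and compare.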

Finally, give yourself some time. Being catfished doesn’t mean you were careless; it shows how advanced these scams have become. Even smart, careful people can fall for them. Next, let’s look at a real example of how AI-driven catfishing works.

Real-Life Example: The “Brad Pitt” AI Catfishing Scam

In January 2025, news broke that Anne, a 53-year-old French woman, had fallen victim to an elaborate AI-generated celebrity catfishing scam. She believed she was in a private relationship with actor Brad Pitt, but the person behind the messages wasn’t real.

It began when “Pitt” contacted her on social media, sharing affectionate messages and fake AI-generated photos. Over time, the scammer claimed he was dealing with legal issues and asked for money to “release funds” and handle “medical expenses.”

Trusting him, Anne sent more than €830,000 (approximately $850,000) over several months to overseas accounts. The fake “Brad Pitt” vanished soon after receiving the final payment.

When Anne later ran a reverse image search, she discovered the photos were AI-generated composites and that she had been manipulated by a professional scam network using deepfake technology.

What This Case Teaches

- AI can make fake identities look real, and even celebrities aren’t immune to impersonation.

- No real celebrity or public figure will ever ask for money or personal help online.

- Always verify before trusting: use reverse image tools and ask for video verification early.

- If you’ve sent money, report and contact fund recovery experts immediately to trace it.

Every scam story is different, but they all lead to one truth: staying informed is your best protection.

Staying Safe from AI Catfishing Scams

Realizing you’ve been targeted by a scam is hard, but it doesn’t mean you made a mistake. Scammers using synthetic identities and deepfake profiles are sophisticated by design. But reading this far shows you’re empowered to act.

If you’ve been affected, our experts at Global Financial Recovery can help you trace lost funds and take your next step toward recovery.

FAQs (Frequently Asked Questions)

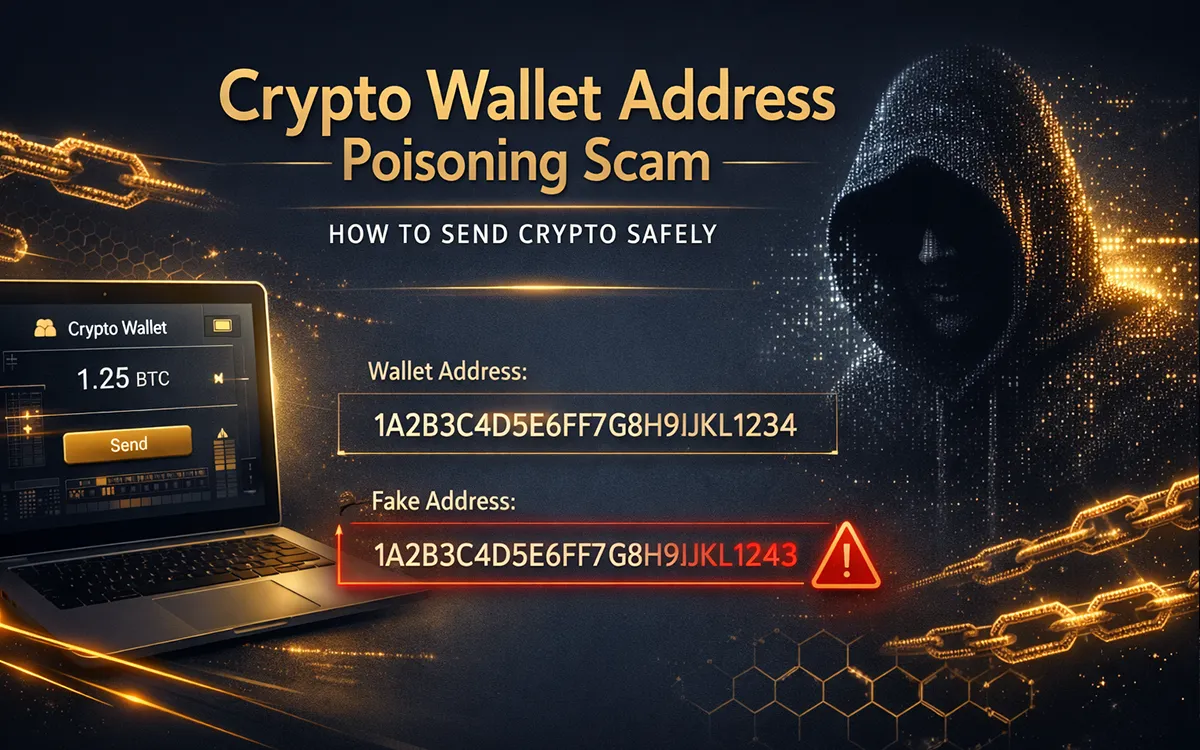

There are recovery agents that can use blockchain analytics tools like Chainalysis or TRM Labs to analyze the wallet activity. Agents can identify the exchange or service provider the wallet was associated with. After an exchange or service provider is identified, a formal request for cooperation can be presented under financial compliance or fraud-prevention laws.

Unfortunately, yes, if scammers obtained enough video or voice samples. However, legal and technical remedies exist. Victims can issue a digital impersonation notice, request content takedowns, and implement facial recognition monitoring to detect new deepfake appearances online.

After a scam, victims often appear on underground data lists sold between fraud groups. To prevent re-targeting:

- Change all passwords and recovery emails

- Enable 2FA across platforms

- Use privacy filters and limited friend requests

- Consider a digital identity shield service that monitors your online footprint for cloned accounts

Yes. A certified investigator can create a digital evidence report containing metadata, screenshots, transaction logs, and other technical indicators (such as markers suggesting an image was generated by a GAN, or an analysis of chat patterns). This documentation helps support complaints to banks, exchanges, and other authorities.

In many cases, yes. AI-generated faces and voices are synthetic, but the people running the scams often reuse the same device fingerprints, Internet Protocol (IP) addresses, or crypto wallet addresses across multiple scam attempts. Investigators can use these and other data points to identify organized networks or repeat offenders operating under a new "skin."
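The idea of linking profiles through reused indicators can be sketched in a few lines of Python. This is a simplified illustration, not a description of any investigator's actual tooling: the profile names, wallet and device labels, and the `link_profiles` function are all made up for the example, and the IP addresses come from ranges reserved for documentation. The sketch groups profiles that share at least one indicator, either directly or through a chain of other profiles.

```python
from collections import defaultdict


def link_profiles(profiles: dict[str, set[str]]) -> list[set[str]]:
    """Group profiles that share an indicator, directly or via a chain."""
    parent = {name: name for name in profiles}

    def find(name: str) -> str:
        # Follow parent links to the group's representative, shortening the path.
        while parent[name] != name:
            parent[name] = parent[parent[name]]
            name = parent[name]
        return name

    def union(a: str, b: str) -> None:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    first_seen: dict[str, str] = {}
    for name, indicators in profiles.items():
        for indicator in indicators:
            if indicator in first_seen:
                union(first_seen[indicator], name)
            else:
                first_seen[indicator] = name

    groups = defaultdict(set)
    for name in profiles:
        groups[find(name)].add(name)
    # Only groups with more than one profile indicate reuse.
    return [members for members in groups.values() if len(members) > 1]


# Hypothetical reports: each profile maps to the indicators seen with it.
reports = {
    "profile_a": {"wallet:W1", "ip:203.0.113.7"},
    "profile_b": {"wallet:W1", "device:D9"},
    "profile_c": {"device:D9"},
    "profile_d": {"ip:198.51.100.4"},
}
clusters = link_profiles(reports)
for cluster in clusters:
    print(sorted(cluster))
```

Here `profile_a` and `profile_c` share nothing directly, yet both end up in the same group because each overlaps with `profile_b`. A shared indicator is a lead rather than proof, since things like IP addresses can be shared by unrelated people.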