- February 5, 2026

If you’ve ever received a strange call that sounded exactly like someone you love, you’re not alone. AI voice cloning scams are spreading fast in 2026, and criminals no longer need clever excuses; they only need a few seconds of your voice to create a perfect deepfake. These scams feel terrifyingly real because the person on the phone doesn’t just sound familiar; they sound exactly like your family member, your boss, or even your bank’s fraud officer.

What makes this new wave of deepfake voice scams so dangerous is how personal they are. Scammers now use AI to copy emotions, tone, and panic, creating emergency calls that feel impossible to question. And before you even have time to think, your money is gone.

This guide will break down how AI phone scams work, why they’ve become one of the fastest-growing threats in 2026, and most importantly, what you can do to protect yourself and your family.

What Is an AI Voice Cloning Scam?

An AI voice cloning scam is a sophisticated form of impersonation fraud in which scammers use neural networks to create an artificial replica of a person’s voice. Recent AI tools can take just a few seconds of audio and reproduce a voice that closely resembles the speaker’s, complete with tone, pitch, pace, and even emotional coloring.

Once the clone is ready, attackers combine it with caller ID spoofing and social engineering techniques to place highly believable phone calls. These deepfake audio calls might claim there’s a bank problem, a legal emergency, a medical crisis, or an urgent work instruction.

What makes these synthetic voice attacks especially dangerous is the real-time cloning capability. Scammers can now generate live responses during the call, allowing them to carry on a full conversation without detection. This creates a level of trust that standard scams never had and makes voice cloning fraud one of the most advanced threats in the modern scam landscape.

How an AI Voice Cloning Scam Works

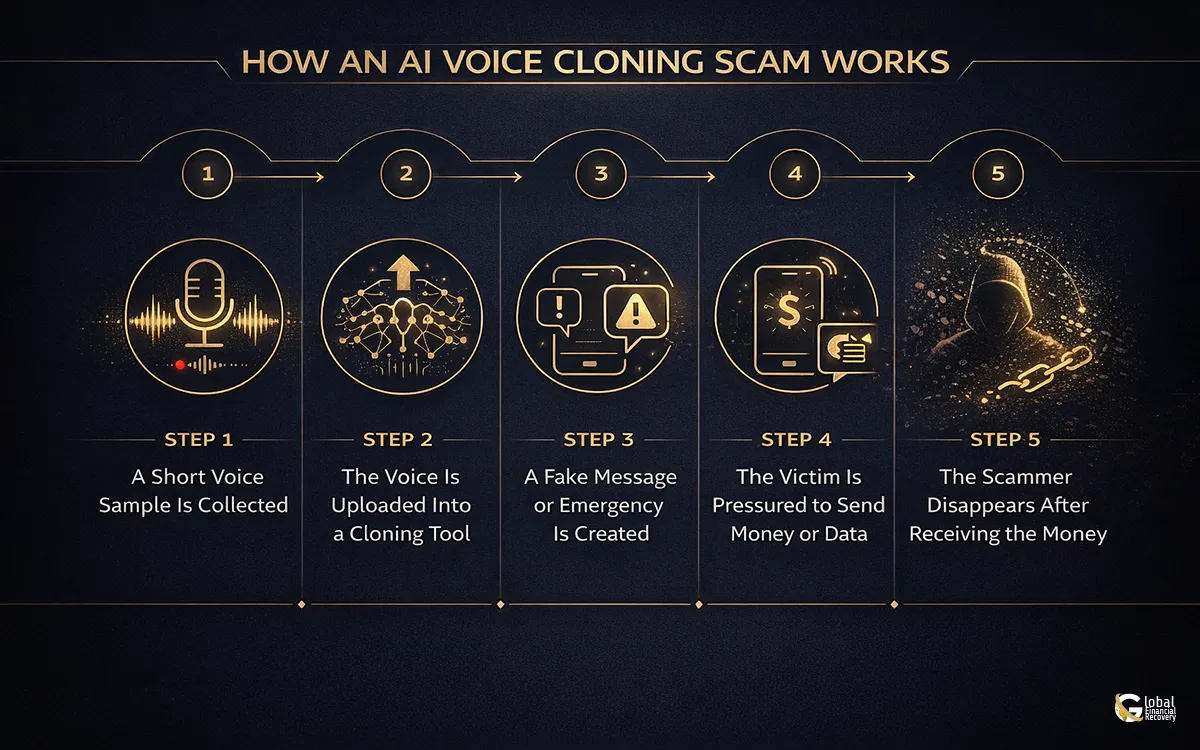

AI voice cloning scams follow a simple pattern. Here’s how scammers use cloned voices step by step to pressure victims into sending money.

1. A Short Voice Sample Is Collected

The scammer starts by gathering a small audio clip of the target. This often comes from social media posts, video content, voicemail greetings, or voice notes. Only a few seconds of clear speech are needed. The purpose is to capture enough voice data to build a realistic clone.

2. The Voice Is Uploaded Into a Cloning Tool

Next, the scammer uploads the audio to an AI voice‑cloning program. The tool analyzes tone, pitch, rhythm, and pronunciation. The software automatically creates a digital “voice model” that sounds nearly identical to the real person. No technical skill is required to do this.

3. A Fake Message or Emergency Is Created

Once the clone is ready, the scammer scripts a message designed to create pressure. They may pose as a relative, a friend, a colleague, a boss, or a service provider. Common themes include emergencies, account problems, financial trouble, and urgent requests. The goal is to make the victim respond quickly without stopping to verify the story.

4. The Victim Is Pressured to Send Money or Data

The cloned voice is then used to demand payment or sensitive information. Scammers commonly request:

- bank transfers

- crypto payments

- gift card codes

- verification codes

- personal or financial details

If the victim hesitates, more cloned messages are sent to increase urgency. Excuses such as “the transfer failed” or “the bank needs it again” are used to continue the scam.

5. The Scammer Disappears After Receiving the Money

Once a payment or piece of information is received, the scammer stops replying. They may return later from a different number, or reuse the same cloned voice in another scam. Because many payment methods are irreversible (crypto and gift cards in particular), the victim rarely recovers the money.

AI voice cloning scams succeed because they target two powerful emotions: fear and love. Scammers understand that no one wants to risk ignoring a call for help from someone they care about. That emotional reflex is exactly what they exploit.

The key is to know these steps so you can recognize the scam and stop the fraud before any money leaves your account.

How to Spot AI Voice Cloning Scams

AI voice cloning scams are becoming harder to detect because cloned voices sound almost identical to real people. But there are still clear warning signs that help you separate a real call from a manipulated one. The table below breaks down the most common red flags so you can quickly identify when a voice may be fake.

| Red Flag | What It Looks Like | Why It’s Suspicious |
| --- | --- | --- |
| Unusual urgency | Caller demands fast action, immediate payments, or instant decisions. | Scammers rely on panic to stop victims from verifying the call. |
| Request for money or sensitive data | The caller asks for bank transfers, crypto, gift cards, or one‑time codes. | Legitimate organizations never make urgent financial requests by voice. |
| Voice sounds slightly off | Tone, emotion, pace, or pronunciation feels “flat,” robotic, or inconsistent. | AI voices often miss natural emotion, breathing, and pacing. |
| Call from an unknown or spoofed number | Caller ID looks unfamiliar or displays the name of a known contact. | Numbers can be easily faked using caller ID spoofing tools. |
| Background noise mismatch | The environment sounds unnatural or completely silent during a “stressful” situation. | AI-generated audio often lacks normal environmental sounds. |
| The caller avoids video or in‑person verification | The caller refuses a video call or ends the call when asked to confirm identity another way. | Scammers cannot replicate faces in real time during a live video. |
| Repeated requests or changing instructions | The caller keeps adding new complications: “send again,” “bank issue,” “verification needed.” | This pattern aligns with typical social‑engineering pressure tactics. |
| The message doesn't match the person’s habits | The caller uses unfamiliar phrases, tone, or communication style. | Voice may match, but behavior often doesn’t. |

Spotting even one of these red flags should be enough to pause, verify, and protect yourself before taking any action.
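For readers who want to turn the red flags above into a simple checklist, for example in a family notes app or a help-desk script, here is a minimal sketch in Python. The flag identifiers, the `assess_call` function, and the action labels are illustrative assumptions, not part of any real tool. The only rule it encodes is the one stated above: a single red flag is enough to pause and verify.

```python
# Red flags from the table above. The short identifiers are
# hypothetical names chosen for this sketch.
RED_FLAGS = {
    "unusual_urgency": "Caller demands fast action or immediate payment",
    "money_or_data_request": "Asks for transfers, crypto, gift cards, or one-time codes",
    "voice_slightly_off": "Tone, pace, or pronunciation feels flat or inconsistent",
    "unknown_or_spoofed_number": "Caller ID is unfamiliar or may be spoofed",
    "background_noise_mismatch": "Environment sounds unnatural or silent",
    "avoids_verification": "Refuses a video call or another identity check",
    "changing_instructions": "Keeps adding new complications",
    "out_of_character": "Phrases or style do not match the person",
}


def assess_call(observed):
    """Return the matched flags and a suggested action for one call.

    `observed` is a set of identifiers from RED_FLAGS. Any single
    match is treated as enough to pause and verify.
    """
    unknown = set(observed) - set(RED_FLAGS)
    if unknown:
        raise ValueError(f"Unknown red flags: {sorted(unknown)}")
    matched = sorted(observed)
    action = "pause_and_verify" if matched else "no_flags_observed"
    return {"matched": matched, "action": action}


if __name__ == "__main__":
    result = assess_call({"unusual_urgency", "money_or_data_request"})
    for flag in result["matched"]:
        print(f"- {RED_FLAGS[flag]}")
    print("Suggested action:", result["action"])
```

An empty result does not prove a call is genuine; it only means none of the listed signs were noticed.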

How to Protect Yourself From AI Voice Cloning Fraud

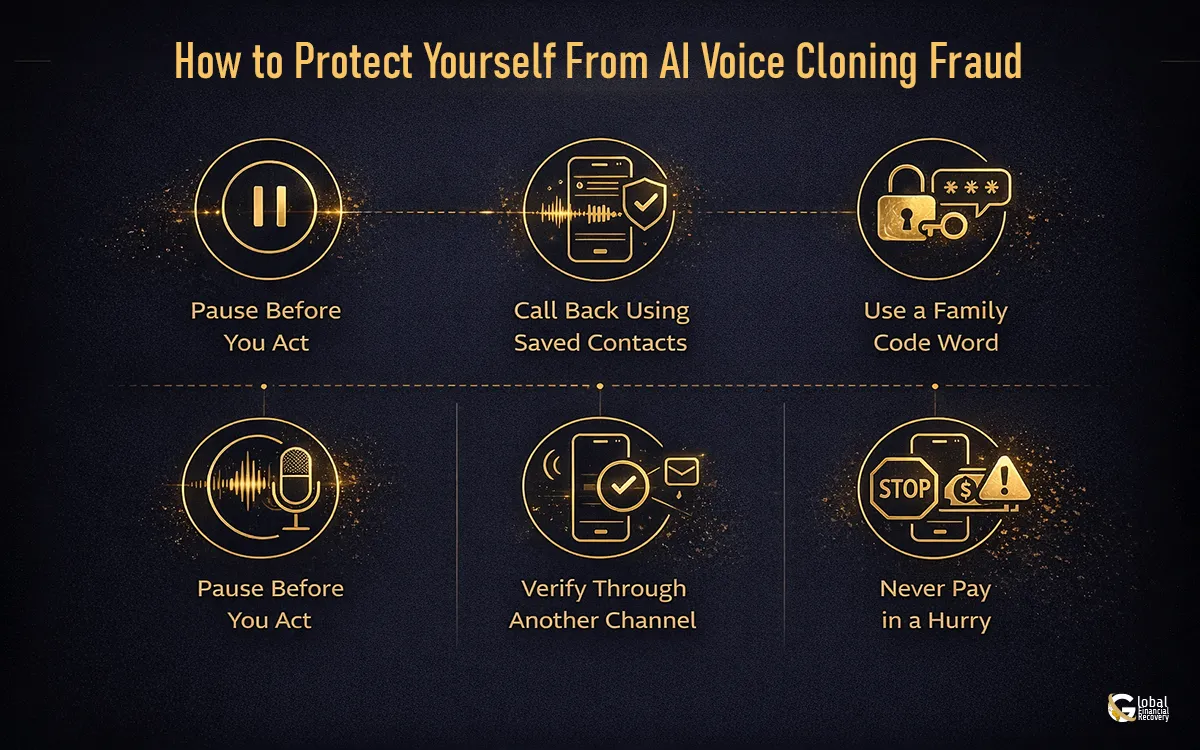

Protecting yourself from AI voice-cloning scams starts with slowing down. Scammers rely on fear and urgency to prompt a rapid reaction. If you receive a call or voice note that sounds alarming, even if it sounds exactly like someone you love, pause before taking action. Call the real person back using the number saved in your contacts. That one step alone can stop most voice-phishing attempts.

Another strong layer of protection is creating a family code word. This is a private phrase shared only with trusted individuals, such as “gold umbrella” or “midnight apple.” If someone calls claiming to be in danger or asks for money, ask for the code word. Scammers won’t know it, even with a perfect cloned voice. Families can also set variations: one code word for emergencies and another for verifying identity.
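If a household or small team wanted to build the code word check into an app or an internal tool, the comparison could look roughly like the sketch below. It is only an illustration: `normalize` and `code_word_matches` are hypothetical helper names, and the example phrase is the one used above. It ignores differences in capitalization and spacing, and uses Python's standard `hmac.compare_digest` for the comparison. A real code word should never be stored in plain text or shared in writing.

```python
import hmac
import unicodedata


def normalize(phrase):
    """Fold case and collapse whitespace so 'Gold  Umbrella' equals 'gold umbrella'."""
    folded = unicodedata.normalize("NFKC", phrase).casefold()
    return " ".join(folded.split())


def code_word_matches(spoken, expected):
    """Compare the phrase the caller gave with the agreed code word.

    hmac.compare_digest is a standard-library function that compares
    two byte strings in a way designed to resist timing attacks.
    """
    return hmac.compare_digest(
        normalize(spoken).encode("utf-8"),
        normalize(expected).encode("utf-8"),
    )


if __name__ == "__main__":
    agreed = "gold umbrella"  # example phrase from the text, not a recommendation
    print(code_word_matches("  Gold   Umbrella ", agreed))
    print(code_word_matches("midnight apple", agreed))
```

In practice the check happens in conversation, not in software. The sketch only shows that the match should be exact apart from capitalization and spacing.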

You should also limit how much of your voice is publicly available. Voice cloning attacks usually begin with short clips from TikTok, Instagram Reels, or YouTube videos, or even old voicemail greetings. Tighten your social media privacy settings and avoid leaving long videos public. If you need to post content, keep your voice clips short and avoid long speeches; this limits the data scammers can work with.

When in doubt, always verify through another channel. If someone calls asking for money, do not send anything until you have confirmed the request: text the real person, call another relative, or ask for a video call. Scammers panic when you start verifying, because they can’t match visual proof.

Be careful with payment methods. Treat any demand for funds through crypto, wire transfers, gift cards, or instant payment apps with immediate suspicion, especially when it comes with emotional pressure. A legitimate emergency can always be verified, and real institutions do not require secret payments.

Lastly, educate older relatives, teens, and anyone else who may be susceptible to these scams. The more people understand how AI voice cloning works, the harder it is for criminals to exploit fear. Staying calm, verifying identity, and refusing to pay in a hurry are the most powerful tools you have for protecting your money, and your peace of mind, against deepfake voice fraud.
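The two rules above, verify on a second channel first and treat hard-to-reverse payment methods with suspicion, can be combined into one small decision helper. This is a sketch under assumptions: the function name `next_step`, the method labels, and the returned strings are all made up for illustration and do not come from any bank or fraud-prevention product.

```python
# Payment methods the text singles out as warranting suspicion.
# The labels are hypothetical identifiers for this sketch.
HIGH_RISK_METHODS = {"crypto", "wire_transfer", "gift_card", "instant_payment_app"}


def next_step(payment_method, verified_on_second_channel):
    """Suggest what to do with a money request received by phone or voice note."""
    if not verified_on_second_channel:
        # Nothing is sent until the real person confirms another way
        # (a text, a call back to a saved number, or a video call).
        return "verify_first"
    if payment_method in HIGH_RISK_METHODS:
        # Even a confirmed request deserves a second look when the
        # payment would be difficult to reverse.
        return "treat_as_suspicious"
    return "proceed_with_care"


if __name__ == "__main__":
    print(next_step("gift_card", verified_on_second_channel=False))
    print(next_step("crypto", verified_on_second_channel=True))
    print(next_step("bank_card", verified_on_second_channel=True))
```

Verification is checked before the payment method because it costs only a phone call, while a sent payment often cannot be undone.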

Your Final Defense Against Deepfake Voice Fraud

Deepfake voice scams are becoming more advanced, but staying informed is your best defense. Remember: a real emergency can always be verified, and genuine loved ones will never pressure you into secrecy or rushed payments. Take a moment to confirm before acting; that pause can save thousands of dollars.

If you’ve been affected by an AI voice scam or another form of digital fraud, Global Financial Recovery is here to help. We work with victims worldwide to review the case, gather evidence, and assist in potential recovery efforts. You don’t have to face this alone.

FAQs (Frequently Asked Questions)

Yes. Most modern tools need only 3–10 seconds of audio from social media, voicemails, or public videos. Longer clips create even more realistic deepfake voices.

Pay attention to behavior, not just the voice. If the caller is unusually urgent, won’t answer personal questions, refuses a video call, or demands money immediately, verify by calling the real person back.

Because urgency stops you from thinking clearly. Scammers use panic stories like accidents or legal trouble to cut off your logical reasoning and force instant action.

It depends on how fast you act. Crypto, gift cards, and wire transfers are difficult to reverse, but reporting immediately helps investigators trace funds and build a recovery case.

Use a family code word, reduce long public voice clips, strengthen social media privacy, and remind everyone not to act on sudden money requests without verifying through another channel.